For many organizations, Informatica PowerCenter has been the workhorse of their data integration for years, even decades, reliably driving ETL processes and populating data warehouses that feed BI reports. However, this longevity often leads to a complex environment that can hinder agility and innovation.

Think of it like planning a move from your home office. What started as a tidy, well-structured workspace gradually turns into a mess of old documents, outdated tech, and redundant systems. Planning a move – or in this case, a migration – becomes a challenge of its own.

This is exactly what many teams face when it’s time to modernize PowerCenter.

The Challenge: Decades of Accumulated Complexity

Over time, PowerCenter environments often evolve into tangled webs of legacy code, tribal knowledge, and unnecessary workflows. The result? Slower innovation, higher cost, and increased risk.

Common challenges we see:

- Code Buildup: Redundant workflows, mappings, and transformations proliferate over time. It’s like having multiple copies of the same document, making it hard to know which is the latest version.

- Knowledge Gaps: Staff turnover leaves teams with a limited understanding of the existing PowerCenter code, and source-to-target mapping documents fall out of date. It’s like inheriting a complex machine without an instruction manual.

- Increased Risk and Cost: All of this complexity makes modernization projects lengthier, more expensive, and riskier.

The Solution: Paradigm’s Accelerator – the Key to Faster and Cheaper IDMC Migrations

The Paradigm Accelerator is a powerful solution designed to solve these challenges head-on. It provides in-depth analysis of your PowerCenter environment, acting as a “code detective” and organizational tool.

How it works:

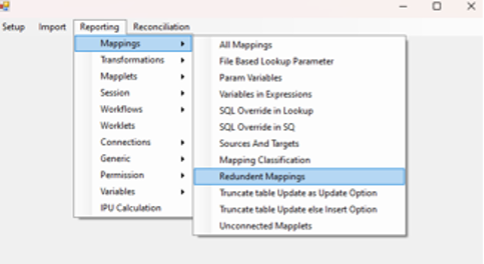

- Identify Duplicates: Pinpoint redundant workflows, mappings, and other assets for removal.

- Map Your Environment: See a clear picture of your PowerCenter landscape, regardless of its age or complexity.

- Understand What You Have: Uncover deep insights into 180+ asset attributes, from transformations to SQL overrides and session details.
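To make the idea of duplicate detection concrete, here is a minimal sketch of how redundant mappings could be grouped. It is not the Accelerator's actual implementation. The dictionary fields (`name`, `folder`, `source`, `target`, `sql_override`) are hypothetical stand-ins for metadata exported from a PowerCenter repository, and the approach shown, hashing everything except the name and folder, is only one simple way to spot copies.

```python
import hashlib
import json
from collections import defaultdict


def fingerprint(mapping: dict) -> str:
    """Hash a mapping's logic, ignoring its name and folder (assumed fields)."""
    logic = {k: v for k, v in mapping.items() if k not in ("name", "folder")}
    # sort_keys gives a stable string regardless of key order in the export
    canonical = json.dumps(logic, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def find_duplicates(mappings: list[dict]) -> list[list[str]]:
    """Return groups of folder/name paths whose logic is identical."""
    groups = defaultdict(list)
    for m in mappings:
        groups[fingerprint(m)].append(f'{m["folder"]}/{m["name"]}')
    return [sorted(names) for names in groups.values() if len(names) > 1]


# Hypothetical inventory: two copies of the same mapping plus one unique mapping
mappings = [
    {"name": "m_load_customer", "folder": "SALES",
     "source": "CUSTOMER", "target": "DIM_CUSTOMER",
     "sql_override": "SELECT * FROM CUSTOMER"},
    {"name": "m_load_customer_copy", "folder": "ARCHIVE",
     "source": "CUSTOMER", "target": "DIM_CUSTOMER",
     "sql_override": "SELECT * FROM CUSTOMER"},
    {"name": "m_load_orders", "folder": "SALES",
     "source": "ORDERS", "target": "FACT_ORDERS",
     "sql_override": ""},
]

duplicate_groups = find_duplicates(mappings)
print(duplicate_groups)
```

An exact-match hash like this only catches byte-for-byte copies of the logic. Near-duplicates, such as mappings that differ only in whitespace inside a SQL override, would need extra normalization before hashing.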

By doing this upfront analysis, you’re not just organizing your digital home, you’re setting the stage for a faster, safer, and smarter move to the cloud.

- De-risk your migration by minimizing errors and rework for a smoother transition.

- Lower cloud costs (FinOps) by not migrating or running unnecessary processes in IDMC.

- Accelerate modernization by reducing the time, cost, and effort of the project.

The 5-Step Plan: Roadmap to PowerCenter Modernization

Modernizing isn’t just about moving code, it’s about transforming your environment for long-term value. Paradigm’s proven 5-step methodology guides you from strategy to execution:

- Understand Your Organization: Determine your organizational structure and data ownership model.

- Assess Your Environment: Inventory your PowerCenter assets. (The Accelerator automates this!)

- Define Migration Scope: Decide which assets to migrate and which to retire.

- Leverage the Accelerator’s Capabilities: The Paradigm Accelerator works with the PowerCenter CDI workbench and Informatica conversion tooling.

- Plan for Thorough Testing: Use Cloud Data Validation automation to confirm accuracy and performance post-migration.
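As an illustration of the scoping step, the sketch below sorts an inventory into migrate, retire, and review buckets. The rules and the field names (`last_run`, `is_duplicate`) are assumptions made for the example, and the 365-day cutoff is an arbitrary placeholder rather than a Paradigm or Informatica default. Real scoping decisions would also involve the data owners identified in the first step.

```python
from datetime import date

STALE_AFTER_DAYS = 365  # assumed cutoff for illustration only


def triage(asset: dict, today: date) -> str:
    """Suggest a bucket for one asset based on simple, assumed rules."""
    if asset["is_duplicate"] or asset["last_run"] is None:
        return "retire"   # redundant copy, or never executed
    if (today - asset["last_run"]).days > STALE_AFTER_DAYS:
        return "review"   # not run recently; confirm with the data owner
    return "migrate"


# Hypothetical inventory rows; a fixed "today" keeps the result reproducible
today = date(2025, 5, 1)
inventory = [
    {"name": "wf_daily_sales", "last_run": date(2025, 4, 30), "is_duplicate": False},
    {"name": "wf_daily_sales_v2", "last_run": date(2025, 4, 30), "is_duplicate": True},
    {"name": "wf_legacy_export", "last_run": date(2022, 1, 15), "is_duplicate": False},
    {"name": "wf_never_scheduled", "last_run": None, "is_duplicate": False},
]

plan = {asset["name"]: triage(asset, today) for asset in inventory}
for name, decision in plan.items():
    print(f"{name}: {decision}")
```

Keeping a separate "review" bucket avoids retiring workflows that run rarely but still matter, such as year-end jobs.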

Paradigm’s Proven Success

Paradigm brings deep expertise to PowerCenter modernization projects. Our team is certified in IDMC and PowerCenter and includes members with experience from Informatica Professional Services.

When a major financial services provider with 80+ years of history partnered with Paradigm, the goal was clear: migrate from legacy PowerCenter to IDMC without disrupting operations.

Results we delivered:

- 400% increase in migration efficiency using the Accelerator

- Eliminated on-prem infrastructure costs

- 8X performance improvement of reusable assets

- Thousands of mappings automatically converted

Why the Cloud? The Benefits of IDMC

Modernizing to Informatica’s Intelligent Data Management Cloud (IDMC) using the Paradigm Accelerator unlocks serious advantages:

- Agility and Scalability: Adapt to changing needs and scale resources easily.

- Improved Performance: Faster data processing for real-time decisions.

- Cost Efficiency: Reduce infrastructure and maintenance costs.

- Access to Innovation: Easily integrate AI, machine learning, and serverless computing.

Ready to Modernize? Paradigm Can Guide Your Way

If you’re ready to modernize your PowerCenter environment and unlock the speed, savings, and intelligence of the cloud, Paradigm is here to help.

Paradigm, an Informatica Platinum Partner and recipient of Informatica’s 2024 Growth Channel Partner of the Year award, will be at Informatica World 2025 (May 13-15, Mandalay Bay Resort, Las Vegas, NV) to discuss how our expertise can benefit your PowerCenter modernization initiatives.

Connect with us! Visit our booth at Informatica World or schedule a consultation today to learn more.

Deepak Rameswarapu, Senior Director of Data Management